NVIDIA has unveiled a new piece of software, NeMo Guardrails, designed to help keep chatbots driven by large language models (LLMs), such as ChatGPT and Microsoft's Bing AI (which is built on OpenAI's GPT-4), on track in various ways.

The biggest problem the software sets out to solve is what are known as 'hallucinations': occasions when the chatbot goes awry and makes an inaccurate or even absurd statement.

These are the kinds of incidents reported in the early days of Bing AI and Google's Bard. Frankly, they're often embarrassing episodes that, for obvious reasons, erode trust in the chatbot.

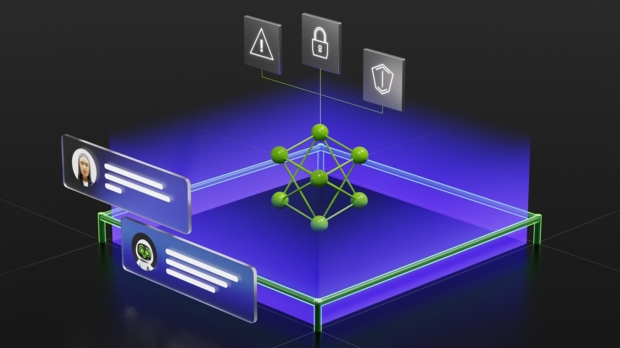

As ZDNet reports, NVIDIA designed NeMo Guardrails to keep chatbots "accurate, appropriate, on topic and secure", and the open source software provides three main kinds of guardrail: safety, security, and topical.

The safety guardrails tackle hallucinations, aiming to stamp out inaccuracies and misinformation peddled by the bot and to make sure the AI refers to credible sources when it cites material in its responses.

Topical guardrails, meanwhile, can stop the chatbot from straying outside the specific topics it's designed to tackle. That should also help curb odd behavior, because it's often when an AI is pushed onto fringe topics that its responses go off the rails.
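To make the idea of a topical guardrail concrete, here's a toy Python sketch of a customer-service bot that only answers billing and shipping questions. It is not how NeMo Guardrails works internally (the toolkit defines its rules in a dedicated modelling language called Colang rather than in keyword lists), and the topic names, keywords, `classify_topic`, and `topical_rail` are all hypothetical, with a stand-in function playing the part of the LLM.

```python
import re
from typing import Callable, Optional

# Hypothetical topics for a customer-service bot. Keyword matching is a
# deliberate simplification, not how NeMo Guardrails decides topics.
ALLOWED_TOPICS = {
    "billing": {"invoice", "refund", "payment", "charge"},
    "shipping": {"delivery", "tracking", "shipment", "courier"},
}

REFUSAL = "Sorry, I can only help with billing or shipping questions."


def classify_topic(message: str) -> Optional[str]:
    """Return the first allowed topic whose keywords appear in the message, else None."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    for topic, keywords in ALLOWED_TOPICS.items():
        if words & keywords:
            return topic
    return None


def topical_rail(message: str, llm: Callable[[str], str]) -> str:
    """Forward on-topic messages to the model and refuse everything else."""
    if classify_topic(message) is None:
        return REFUSAL
    return llm(message)


if __name__ == "__main__":
    # Stand-in for a real LLM call.
    fake_llm = lambda m: f"[model answer to: {m}]"
    print(topical_rail("Where is my refund?", fake_llm))
    print(topical_rail("Who will win the election?", fake_llm))
```

The refund question is passed through to the model, while the election question gets the canned refusal. The point of the design is that the check runs before the model ever sees an off-topic prompt, so it never gets the chance to improvise an answer.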

Finally, security guardrails stop the chatbot from connecting to external apps that have been flagged as suspicious or unsafe, NVIDIA tells us.
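One simple way to picture a security guardrail is as an allowlist check that runs before the bot calls any outside service. The Python below is a minimal sketch under that assumption only: the host names, `is_call_allowed`, and `call_external_app` are hypothetical and are not part of NeMo Guardrails' actual API.

```python
from typing import Callable
from urllib.parse import urlparse

# Hypothetical allowlist of external services the chatbot may call.
TRUSTED_HOSTS = {"api.weather.example", "docs.example.com"}


def is_call_allowed(url: str) -> bool:
    """Allow only HTTPS requests to hosts that appear exactly on the allowlist."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in TRUSTED_HOSTS


def call_external_app(url: str, fetch: Callable[[str], str]) -> str:
    """Run `fetch` only if the URL passes the allowlist check; otherwise refuse."""
    if not is_call_allowed(url):
        raise PermissionError(f"Blocked call to untrusted endpoint: {url}")
    return fetch(url)
```

Matching the exact hostname, rather than checking whether a trusted name merely appears somewhere in the URL, means a lookalike address such as `https://api.weather.example.evil.net/` is rejected.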

NeMo framework

We don't know when the NeMo Guardrails software will be released, but when it is, it'll be included with the NVIDIA NeMo framework (whose code is open source and available on GitHub). Because NeMo Guardrails will be compatible with a wide range of LLM-enabled apps, developers should be able to use it widely to tame the worst excesses of their AI projects, or at least that's the idea.

NVIDIA observes:

"Virtually every software developer can use NeMo Guardrails - no need to be a machine learning expert or data scientist. They can create new rules quickly with a few lines of code."

This sounds like a welcome development for policing chatbots and keeping them from going off on tangents or getting things completely wrong, an obvious concern for AI systems operating without oversight.

Indeed, we've recently heard calls from a European watchdog to investigate whether AI poses a risk to consumers, and what steps should be taken to protect the public against any such perils.

The European Consumer Organisation (BEUC) is particularly worried about the effect chatbots might have on younger people and children, who may be prone to believing that an AI is authoritative or even infallible, when it clearly can make mistakes. NVIDIA's software could help make such mistakes rarer.